PAPER

- Kyoungmin Han, Minsik Lee, “EnSiam: Self-Supervised Learning With Ensemble Representations,” Engineering Applications of Artificial Intelligence, vol. 143, pp. 110007, Mar. 2025. [paper]

ABSTRACT

Recently, contrastive self-supervised learning, where the proximity of representations is determined based on the identities of samples, has made remarkable progress in unsupervised representation learning.

SimSiam is a well-known example in this area, noted for its simplicity and strong performance. However, it is known to be sensitive to changes in training configurations, such as hyperparameters and augmentation settings, because of its structural characteristics. To address this issue, we focus on the structural similarity between contrastive learning and the teacher-student framework in knowledge distillation. Inspired by ensemble-based knowledge distillation, we propose a new self-supervised learning method, EnSiam, which introduces ensemble representations into the pseudo labels. This can reduce the variance of the contrastive learning procedure and thereby improve performance. Experiments show that EnSiam outperforms existing methods in most cases, including on ImageNet, indicating that EnSiam is capable of learning high-quality representations. Furthermore, we extend our approach to various other self-supervised learning methods and empirically confirm that ensemble representations consistently improve performance in general self-supervised learning.
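A back-of-the-envelope calculation suggests why averaging pseudo labels can lower variance. Assume, purely for illustration, that a given coordinate of each of the K view representations $f_k$ (the notation of the figure caption below) has variance $\sigma^2$ and that every pair of views has correlation $\rho$; these are simplifying assumptions, not results from the paper.

$$
\operatorname{Var}\!\left(\frac{1}{K}\sum_{k=1}^{K} f_k\right)
= \frac{1}{K^2}\sum_{k=1}^{K}\sum_{l=1}^{K}\operatorname{Cov}(f_k, f_l)
= \frac{K\sigma^2 + K(K-1)\rho\,\sigma^2}{K^2}
= \sigma^2\left(\rho + \frac{1-\rho}{K}\right)
$$

For $K = 1$ this reduces to $\sigma^2$, and for $\rho < 1$ it shrinks toward $\rho\,\sigma^2$ as $K$ grows. Augmented views of the same image are correlated in practice, so under this model the reduction is partial rather than the full $1/K$ of independent views.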

OVERALL FRAMEWORK

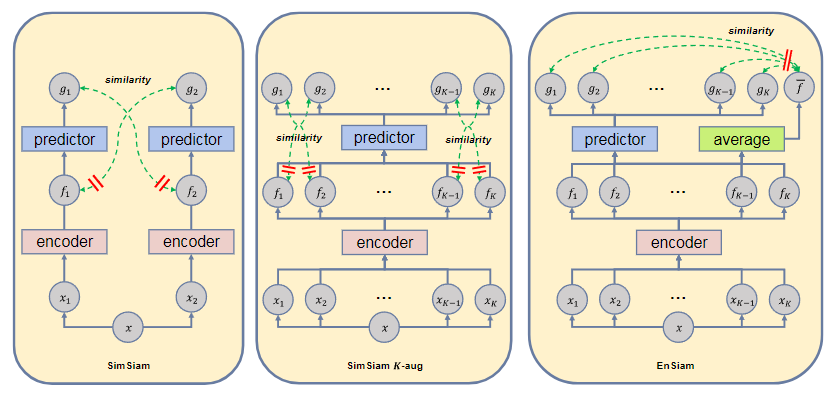

- Structure overview. The leftmost is SimSiam, the middle is SimSiam K-aug, a simple variant of SimSiam, and the rightmost is the proposed method. All three frameworks apply a stop-gradient operation to the branch that provides the pseudo labels. In the case of EnSiam, the stop-gradient operation, represented by a red double line, is applied to $\bar{f}$, the average of the $f_k$. Both EnSiam and SimSiam K-aug generate K samples through augmentation and pass them through the same encoder and predictor. The models are then trained to maximize the similarities indicated by the green dashed lines.
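The forward computation described in the caption can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: we assume the $f_k$ are the encoder outputs for the K augmented views, that hypothetical predictor outputs $p_k$ are each compared with the averaged target $\bar{f}$, and that the loss is a SimSiam-style negative cosine similarity averaged over the K predictions. The exact EnSiam objective (for example, any weighting of the terms) may differ. The function names `mean_vector`, `cosine_similarity`, and `ensiam_style_loss` are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]


def mean_vector(vectors: Sequence[Vector]) -> Vector:
    """Element-wise average of K vectors (the ensemble target f-bar)."""
    k = len(vectors)
    return [sum(components) / k for components in zip(*vectors)]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def ensiam_style_loss(encoder_outputs: Sequence[Vector],
                      predictor_outputs: Sequence[Vector]) -> float:
    """Hypothetical EnSiam-style loss: negative mean cosine similarity
    between each prediction p_k and the averaged target f-bar.

    Assumption: in a real training loop the target would be wrapped in a
    stop-gradient (e.g. `.detach()` in PyTorch). Plain Python has no
    autograd, so the target is simply treated as a constant here.
    """
    if len(encoder_outputs) != len(predictor_outputs):
        raise ValueError("need exactly one prediction per augmented view")
    target = mean_vector(encoder_outputs)  # f-bar: average of the f_k
    sims = [cosine_similarity(p, target) for p in predictor_outputs]
    return -sum(sims) / len(sims)


if __name__ == "__main__":
    # Two toy "views" (K = 2) whose encoder outputs average to [0.5, 0.5].
    f = [[1.0, 0.0], [0.0, 1.0]]
    p = [[1.0, 1.0], [1.0, 1.0]]  # predictions aligned with the target
    print(ensiam_style_loss(f, p))  # ≈ -1.0, the minimum possible value
```

Because every prediction is pulled toward the same averaged target rather than toward a single other view, the K branches share one pseudo label. This is the structural difference from SimSiam K-aug that the figure highlights.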