PAPER

- Changho No and Minsik Lee, “Global and local feature communications with transformers for 3D human pose estimation,” Scientific Reports, vol. 15, Art. no. 6624, Feb. 2025. [paper]

ABSTRACT

Recently, spatiotemporal Transformer structures have been widely applied to the problem of 3D human pose estimation, achieving state-of-the-art performance. Many of these approaches consider a single joint in a single frame as a token, and attention is applied over the tokens in either the same frame or the same trajectory. While this structure is effective for calculating correlations between individual joints, it is too restrictive in that global features such as frames or trajectories are not well communicated. In this paper, we propose GaLFormer to resolve this issue. GaLFormer is composed of local and global Transformer blocks, where the former is based on joint tokens as in the previous methods, while the latter, i.e., global mixing Transformer, mixes all joints existing in a specific range of frames to enforce an inductive bias for feature exchange. These two Transformer blocks are alternately repeated in the proposed method to calculate correlations between joints, shapes, and trajectories. Experiments show that our approach achieves superior or at least competitive performance compared to existing methods on the Human3.6M, MPI-INF-3DHP, and HumanEva datasets.
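The abstract contrasts two ways of grouping the T×J joint tokens: local attention within one frame or one trajectory, and global mixing over all joints in a range of frames. The sketch below illustrates only that grouping, under stated assumptions: single-head attention with random projection matrices, and no MLP, normalization, or positional embeddings. The global variant is a hypothetical reading in which all J joints from `window` consecutive frames attend to one another as a single token set. The function names and the `window` parameter are illustrative and are not taken from the paper's code.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product attention among the tokens on axis -2.

    tokens: (..., N, C); leading axes index independent token groups.
    """
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

def local_spatial(x, w):
    # x: (T, J, C). Each frame is one group: its J joint tokens attend to each other.
    return self_attention(x, *w)

def local_temporal(x, w):
    # Each joint's trajectory is one group: its T tokens attend along time.
    xt = np.swapaxes(x, 0, 1)                       # (J, T, C)
    return np.swapaxes(self_attention(xt, *w), 0, 1)

def global_mixing(x, w, window):
    # Hypothetical reading (not GaLFormer's exact design): all J joints in
    # `window` consecutive frames form one group of window*J tokens.
    T, J, C = x.shape
    assert T % window == 0, "T must be divisible by window"
    groups = x.reshape(T // window, window * J, C)  # (T/W, W*J, C)
    return self_attention(groups, *w).reshape(T, J, C)

# Usage with random data: T frames, J joints, C channels.
rng = np.random.default_rng(0)
T, J, C = 8, 17, 16
x = rng.standard_normal((T, J, C))
w = [rng.standard_normal((C, C)) / np.sqrt(C) for _ in range(3)]
for f in (local_spatial, local_temporal):
    print(f.__name__, f(x, w).shape)
print("global_mixing", global_mixing(x, w, window=2).shape)
```

Because attention runs only inside each group, perturbing frame 0 leaves the `local_spatial` outputs of every other frame unchanged, while `global_mixing` with `window=2` lets that change reach frame 1 but no later frames. This is the kind of cross-frame feature exchange the global blocks are meant to enforce.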

OVERALL FRAMEWORK

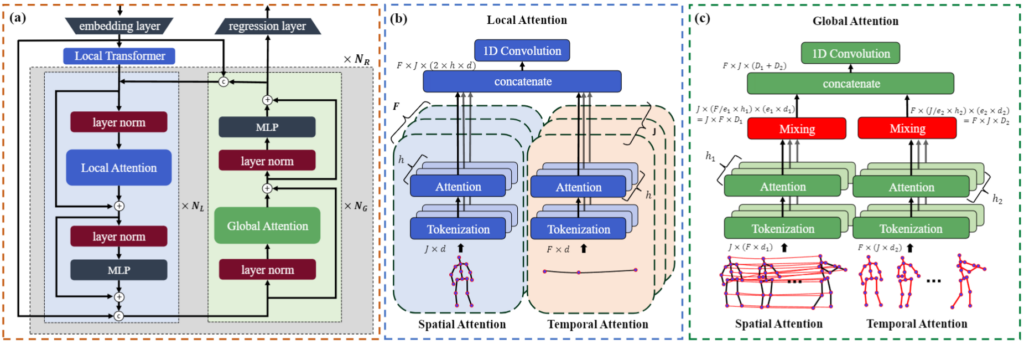

- (a) Overview of the proposed method. Here, the light blue region represents the local Transformer block, while the light green region represents the global Transformer block. (b) Local attention. (c) Global attention. Our method uses a structure consisting of N_G sets of Transformer blocks, each containing N_L Transformer blocks with local attention and N_G Transformer blocks with global attention.
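The caption describes an alternating stack of local and global blocks. A minimal sketch of that ordering follows. It assumes that each block is wrapped in a residual connection and leaves the number of global blocks per set as a free parameter `n_global`. `build_schedule`, `forward`, and the toy blocks are hypothetical names, not the authors' implementation.

```python
from typing import Callable, List
import numpy as np

Block = Callable[[np.ndarray], np.ndarray]

def build_schedule(n_sets: int, n_local: int, n_global: int) -> List[str]:
    """Return the block order: n_sets repetitions of
    n_local local blocks followed by n_global global blocks."""
    order: List[str] = []
    for _ in range(n_sets):
        order += ["local"] * n_local + ["global"] * n_global
    return order

def forward(x: np.ndarray, schedule: List[str],
            local_block: Block, global_block: Block) -> np.ndarray:
    # Apply the blocks in order, each wrapped in a residual connection (assumed).
    for kind in schedule:
        block = local_block if kind == "local" else global_block
        x = x + block(x)
    return x

# Usage: 2 sets of (3 local, 1 global) blocks; toy blocks stand in for real Transformers.
schedule = build_schedule(n_sets=2, n_local=3, n_global=1)
print(schedule)
# ['local', 'local', 'local', 'global', 'local', 'local', 'local', 'global']
out = forward(np.zeros((4, 17, 3)), schedule,
              local_block=lambda x: np.zeros_like(x),
              global_block=lambda x: np.ones_like(x))
print(out[0, 0])  # each of the 2 global blocks adds 1, giving [2. 2. 2.]
```

In a real model, `local_block` and `global_block` would be Transformer blocks with separate weights at each position. Sharing a single callable here only keeps the ordering logic visible.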

QUALITATIVE RESULTS

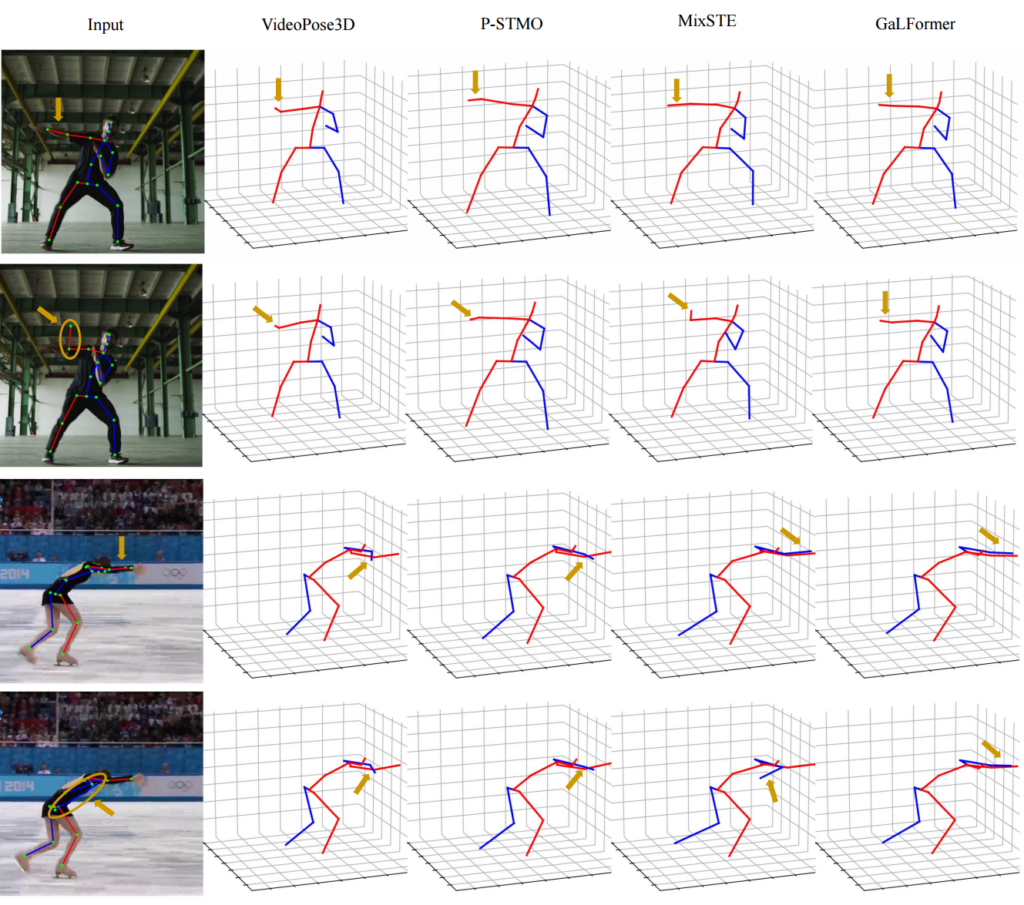

- Qualitative results on in-the-wild videos. VideoPose3D, P-STMO, and MixSTE were also applied for comparison. The yellow arrows indicate each method's response to momentary noise.