PAPER

- Sora Kim, Sungho Suh, and Minsik Lee, “RAD: Region-Aware Diffusion Models for Image Inpainting,” IEEE Conf. Computer Vision and Pattern Recognition (CVPR), June 2025.

ABSTRACT

Diffusion models have achieved remarkable success in image generation, and their applications are expanding across many domains. Inpainting is one such application that can benefit significantly from diffusion models. Existing methods either hijack the reverse process of a pretrained diffusion model or cast the problem into a larger framework, i.e., conditioned generation. However, these approaches often require nested loops in the generation process or additional components for conditioning. In this paper, we present region-aware diffusion models (RAD) for inpainting, a simple yet effective reformulation of the vanilla diffusion model. RAD uses a different noise schedule for each pixel, which allows local regions to be generated asynchronously while still considering the global image context. Because RAD uses a plain reverse process with no additional components, its inference is up to 100 times faster than state-of-the-art approaches. Moreover, we employ low-rank adaptation (LoRA) to fine-tune RAD from other pretrained diffusion models, which also reduces the computational cost of training. Experiments demonstrate that RAD achieves state-of-the-art results, both qualitatively and quantitatively, on the FFHQ, LSUN Bedroom, and ImageNet datasets.
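The per-pixel noise schedule can be illustrated with the standard DDPM forward marginal, x_t = sqrt(ᾱ) x_0 + sqrt(1 − ᾱ) ε, evaluated with a separate ᾱ for every pixel. The sketch below is a minimal illustration under that assumption, not the RAD implementation; the function name `forward_noise` and the flat-list image representation are hypothetical simplifications.

```python
import math
import random


def forward_noise(x0, alpha_bar, rng):
    """Sample x_t ~ q(x_t | x_0) where every pixel has its own signal level.

    Hypothetical sketch: assumes the DDPM-style Gaussian forward marginal,
    applied elementwise with a per-pixel cumulative signal level.

    x0:        clean image as a flat list of pixel values
    alpha_bar: per-pixel signal level at the current step, same length as x0,
               each in [0, 1] (1 = untouched pixel, 0 = pure noise)
    rng:       random.Random instance, for reproducible noise
    """
    # One independent standard Gaussian sample per pixel.
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    # Mix signal and noise pixel by pixel according to that pixel's schedule.
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e
           for x, a, e in zip(x0, alpha_bar, eps)]
    return x_t, eps


if __name__ == "__main__":
    rng = random.Random(0)
    x0 = [0.5, -0.2, 0.9, 0.1]
    mask = [False, True, True, False]  # True = pixel to inpaint
    alpha_bar = [0.0 if m else 1.0 for m in mask]
    x_t, eps = forward_noise(x0, alpha_bar, rng)
    print(x_t)
```

Setting ᾱ to 1 outside the mask and 0 inside it leaves the known pixels exactly unchanged and replaces the masked pixels with pure noise, which is the state an inpainting reverse process would start from.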

OVERALL FRAMEWORK

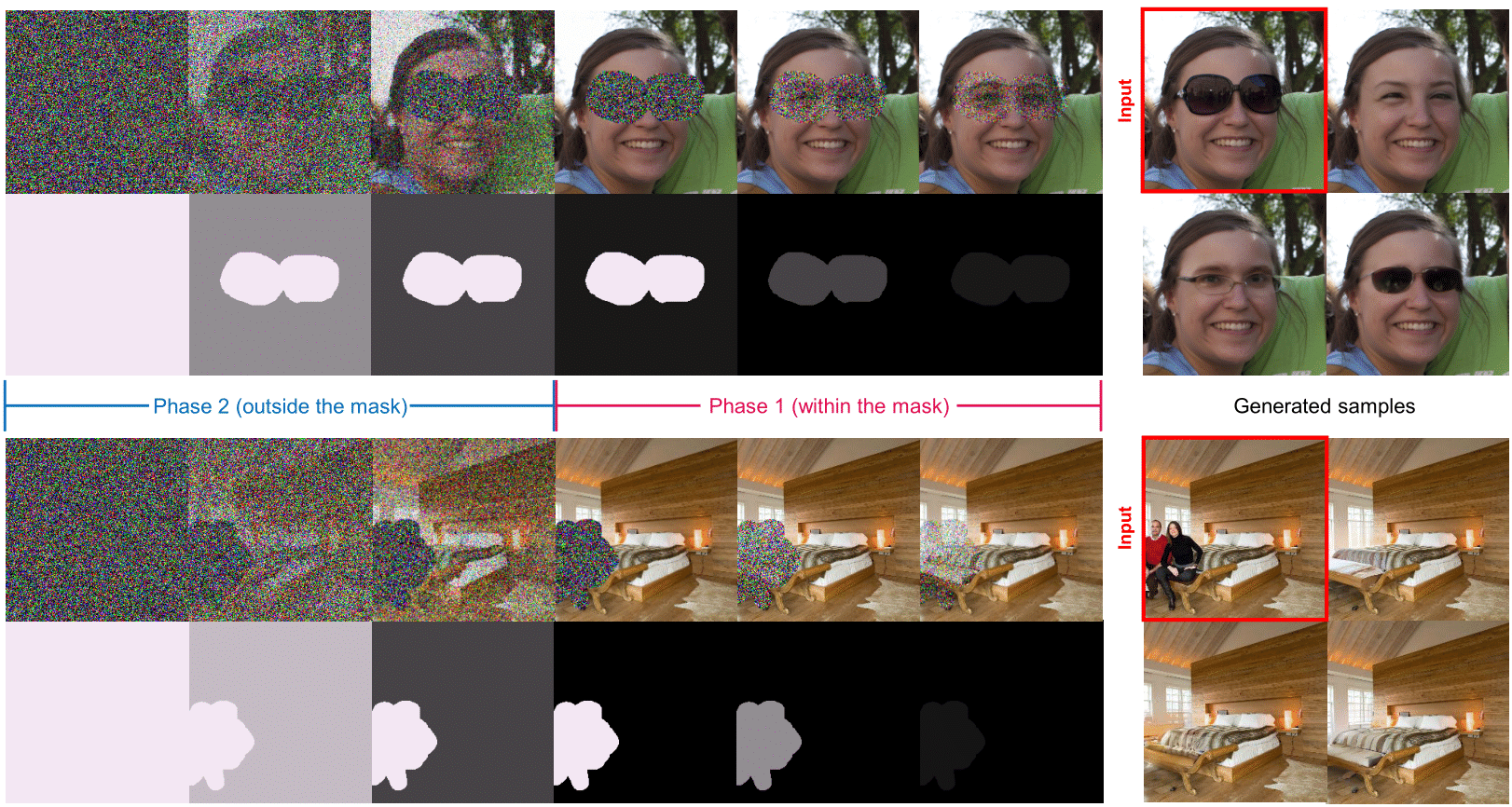

- Region-aware diffusion models (RAD) in action. We divide the entire diffusion process into two phases: in Phase 1, noise is added only to the pixels inside the given inpainting mask, and in Phase 2, to the remaining pixels. This mirrors the inpainting setting, where only part of an image must be generated (Phase 1) while the rest is already present (Phase 2). The mask pixels are noised first because generation is performed in the reverse process, so Phase 1 becomes the final stage of generation. After training, only Phase 1 is used for inpainting because it suffices to generate the masked region.
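The two-phase schedule in the caption can be sketched as a per-pixel signal level that depends on both the timestep and the mask. This is a minimal sketch under assumptions: the step split `t1` / `t_total`, the linear placeholder schedule ᾱ = 1 − s (where s is a pixel's progress through its own phase), and all function names are hypothetical and not taken from the RAD paper or code; only the phase ordering follows the caption.

```python
def progress(t, is_masked, t1, t_total):
    """Fraction of its own noising phase a pixel has completed at step t.

    Masked pixels are noised during Phase 1 (steps 1..t1); all other pixels
    stay clean until Phase 2 (steps t1+1..t_total).
    """
    if is_masked:
        return min(t, t1) / t1
    return max(t - t1, 0) / (t_total - t1)


def alpha_bar(t, is_masked, t1, t_total):
    """Per-pixel signal level; the linear map 1 - s is a placeholder schedule."""
    return 1.0 - progress(t, is_masked, t1, t_total)


def alpha_bar_map(t, mask, t1, t_total):
    """Signal level for every pixel of a flat mask (True = pixel to inpaint)."""
    return [alpha_bar(t, m, t1, t_total) for m in mask]


def inpainting_timesteps(t1):
    """Reverse steps used at inference: only Phase 1, from t1 down to 1."""
    return list(range(t1, 0, -1))


if __name__ == "__main__":
    mask = [False, True, True, False]
    for t in (0, 125, 250, 625, 1000):
        print(t, alpha_bar_map(t, mask, t1=250, t_total=1000))
```

At t = t1 the map is 0 inside the mask and 1 elsewhere, matching an inpainting input: masked pixels are pure noise and the rest is the known image. This is why, in this sketch, the reverse loop only needs the Phase 1 steps returned by `inpainting_timesteps`.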